Model accuracy has emerged as a critical factor, but as models and datasets grow, achieving it requires unprecedented levels of cost and energy. Meanwhile, current AI infrastructures suffer from resource underutilisation because they rely on GPUs without considering the diverse resource demands of different AI workloads.

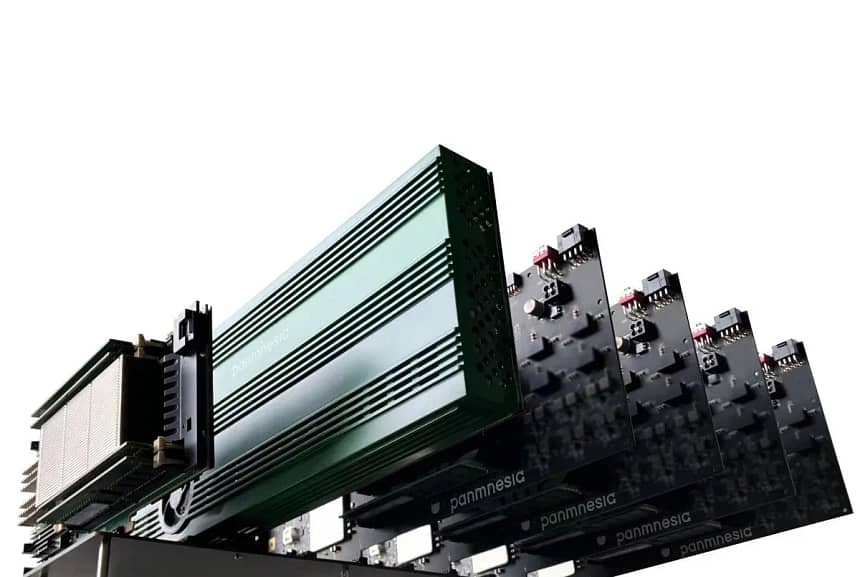

Panmnesia aims to address these challenges with its chiplet-based AI accelerator. The accelerator can be configured in a flexible manner based on demands, and it also incorporates in-memory processing technology to minimise data movement.

These components will be integrated into Panmnesia’s proprietary CXL full-system solution.


Features:

1. Reusable and Flexible Chiplet-based Architecture

Panmnesia’s AI accelerator enables flexible scaling of compute and memory resources by placing compute and memory chiplets (tiles) according to the demands of AI workloads, resulting in optimised resource utilisation.

The modular design allows for faster development cycles through partial SoC modification—only the chiplets related to the required functionality need to be updated, while the rest can be reused without any modification. This significantly reduces development time, facilitating rapid adaptation to evolving industry trends.

2. Manycore Architecture and Vector Processor

Panmnesia’s AI accelerator features two types of compute chiplets: (1) chiplets with manycore architecture (called ‘Core Tiles’) and (2) chiplets with vector processors (called ‘PE Tiles’).

Customers can optimise performance per watt by choosing the appropriate compute chiplet architecture for their target workload. For instance, Core Tiles (chiplets with manycore architecture) are well-suited to accelerating highly parallel tasks by exploiting their massive parallelism, while PE Tiles (chiplets with vector processors) are specialised for operations on vector data, such as distance calculations.

Moreover, because the company plans to use advanced semiconductor process nodes to design its SoCs, the accelerator can integrate over a thousand cores within a single chip. This allows it to meet the massive computational demands of modern AI applications efficiently.
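To make the "distance calculations" mentioned above more concrete, the following is a minimal sketch in plain Python of a nearest-vector search based on Euclidean distance. It is not Panmnesia's API or implementation; the function names `squared_l2` and `nearest` are hypothetical and chosen only for illustration. The point is that the inner loop applies the same subtract-multiply-accumulate step to every element, which is the kind of regular, element-wise work a vector processor is designed for.

```python
import math


def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    # The same subtract-multiply-accumulate step runs on every element pair.
    return sum((x - y) * (x - y) for x, y in zip(a, b))


def nearest(query, candidates):
    """Return (index, distance) of the candidate closest to the query.

    Hypothetical helper for illustration only. Returns (-1, inf) when
    there are no candidates.
    """
    best_index, best_sq = -1, math.inf
    for i, candidate in enumerate(candidates):
        d = squared_l2(query, candidate)
        if d < best_sq:
            best_index, best_sq = i, d
    # Take the square root once, only for the winning candidate.
    return best_index, math.sqrt(best_sq)
```

For example, `nearest([0, 0], [[3, 4], [1, 0], [5, 5]])` picks the second candidate, since it lies at distance 1 from the query while the others are farther away.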

3. In-Memory Processing

Data movement between memory and compute resources is a significant cause of power consumption. To address this, Panmnesia incorporates in-memory processing technologies that remove unnecessary data movement within AI infrastructure. This significantly reduces the power consumed by data transfers during the processing of large-scale AI workloads.

Panmnesia also integrates its proprietary CXL IP into the system. As resource pooling based on CXL enables on-demand expansion of computing and memory resources, customers can optimise the cost of their AI infrastructure.