- Google and the Wild Dolphin Project have developed an AI model trained to understand dolphin vocalizations

- DolphinGemma can run directly on Pixel smartphones

- It will be open-sourced this summer

For most of human history, our relationship with dolphins has been a one-sided conversation: we talk, they squeak, and we nod like we understand each other before tossing them a fish. But now, Google has a plan to use AI to bridge that divide. Working with Georgia Tech and the Wild Dolphin Project (WDP), Google has created DolphinGemma, a new AI model trained to understand and even generate dolphin chatter.

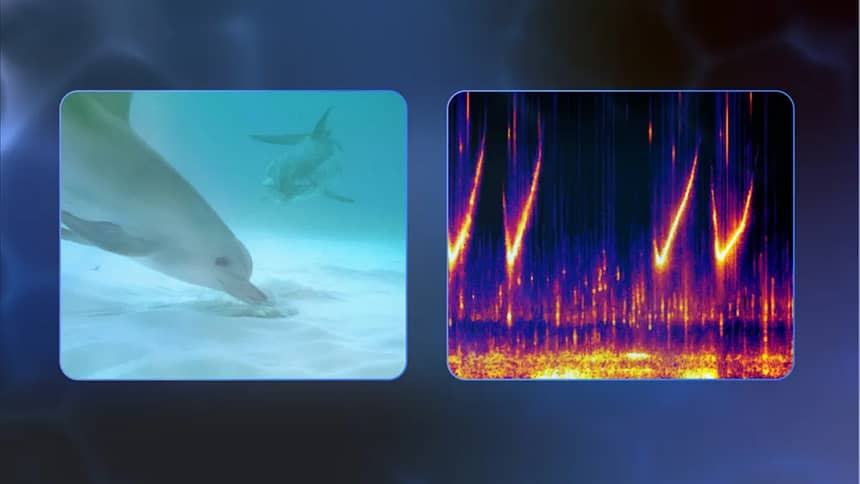

The WDP has been collecting data on a specific group of wild Atlantic spotted dolphins since 1985. The Bahamas-based pod has provided huge amounts of audio, video, and behavioral notes as the researchers have observed them, documenting every squawk and buzz and trying to piece together what it all means. This treasure trove of audio is now being fed into DolphinGemma, which is based on Google’s open Gemma family of models. DolphinGemma takes dolphin sounds as input, processes them using audio tokenizers like SoundStream, and predicts what vocalization might come next. Imagine autocomplete, but for dolphins.
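To make the "autocomplete" idea concrete, here is a deliberately tiny sketch of next-token prediction. It is not DolphinGemma, which is a Gemma-based neural model. It swaps in a simple bigram counter that learns which token tends to follow which. The integer token IDs are hypothetical stand-ins for the discrete codes an audio tokenizer such as SoundStream would produce, and the names `train_bigram`, `predict_next`, and `autocomplete` are illustrative, not part of any real DolphinGemma API.

```python
from collections import Counter, defaultdict

def train_bigram(sequences):
    """Count how often each token is immediately followed by each other token."""
    follows = defaultdict(Counter)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, token):
    """Return the most frequent successor of `token`, or None if it was never seen."""
    if token not in follows:
        return None
    return follows[token].most_common(1)[0][0]

def autocomplete(follows, prompt, steps):
    """Greedily extend `prompt` by up to `steps` predicted tokens."""
    out = list(prompt)
    for _ in range(steps):
        nxt = predict_next(follows, out[-1])
        if nxt is None:  # no known continuation, so stop early
            break
        out.append(nxt)
    return out

# Hypothetical tokenized recordings (each int stands for one audio token).
sequences = [[3, 7, 7, 1], [3, 7, 1, 2], [5, 3, 7, 1]]
model = train_bigram(sequences)
print(autocomplete(model, [5], 5))  # [5, 3, 7, 1, 2]
```

A real model conditions on a long history of tokens rather than just the previous one, which is what lets it pick up on longer vocal patterns, but the core task is the same: given what has been heard so far, guess what comes next.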

The model is small enough to run directly on a Google Pixel phone. WDP plans to deploy DolphinGemma in the field this summer, using Pixel 9 phones in waterproof rigs. The phones will listen in, identify vocal patterns, and help researchers flag meaningful sequences in real time.

Flipper speaks

But the ultimate goal here isn’t just passive listening. WDP and Georgia Tech are also working on a system called CHAT (short for Cetacean Hearing Augmentation Telemetry), which is essentially a two-way communication system for humans and dolphins. CHAT lets researchers associate synthetic whistles with objects the dolphins enjoy, such as seagrass and floating scarves, and then listens for whether the dolphins mimic those whistles to request the objects. It’s a bit like inventing a shared vocabulary, except with underwater microphones instead of flashcards.

DolphinGemma doesn’t just analyze dolphin sounds after the fact. By anticipating which sounds are likely to come next, it can help the system respond faster and keep interactions smoother. The whole project is still at an early stage, but Google plans to open-source DolphinGemma this summer to accelerate progress.

The initial model is trained on the vocalizations of Atlantic spotted dolphins, but it could theoretically be adapted to other species with some tuning. The idea is to hand other researchers the keys to the AI so they can apply it to their own acoustic datasets. Of course, this is still a long way from chatting with dolphins about philosophy or their favorite snacks. There’s no guarantee that dolphin vocalizations map neatly to human-like language. But DolphinGemma will help sift through years of audio for meaningful patterns.

Dolphins aren’t the only animals humans may use AI to communicate with. Another group of scientists developed an AI algorithm to decode pigs’ emotions based on their grunts, squeals, and snuffles to help farmers understand their emotional and physical health. Dolphins are undeniably more charismatic, though. Who knows, maybe someday you’ll be able to ask a dolphin for directions while you’re sailing, at least if you don’t drop your phone in the water.