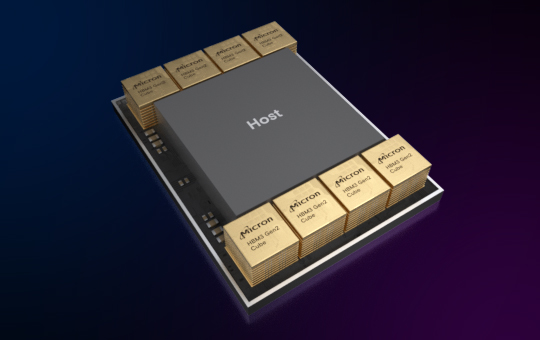

It is built on Micron’s 1β (1-beta) DRAM process node, which allows a 24Gb DRAM die to be assembled into an 8-high cube within an industry-standard package dimension.

A 12-high stack with 36GB capacity will begin sampling in Q1 2024.
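The two capacities quoted above follow from the die density and the stack height. A minimal sketch of that arithmetic, where the function name `stack_capacity_gbytes` is a hypothetical helper and not anything from Micron:

```python
def stack_capacity_gbytes(die_gbit: int, dies_in_stack: int) -> float:
    """Capacity of one HBM cube in gigabytes.

    die_gbit is the density of a single DRAM die in gigabits;
    dividing by 8 converts gigabits to gigabytes.
    """
    return die_gbit * dies_in_stack / 8


eight_high = stack_capacity_gbytes(24, 8)    # 24Gb die, 8-high cube
twelve_high = stack_capacity_gbytes(24, 12)  # 24Gb die, 12-high cube
print(eight_high, twelve_high)
```

With a 24Gb die this gives 24GB for the 8-high cube and 36GB for the 12-high stack, matching the figures in the article.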

The device’s improved performance-to-power ratio and pin speed help manage the extreme power demands of AI data centres.

The improved power efficiency comes from advances such as doubling the number of through-silicon vias (TSVs), reducing thermal impedance through a fivefold increase in metal density, and an energy-efficient data path design.

Micron is a partner in TSMC’s 3DFabric Alliance to help shape the future of semiconductor and system innovations.

As part of the HBM3 Gen2 product development effort, the collaboration between Micron and TSMC lays the foundation for a smooth introduction and integration in compute systems for AI and HPC design applications.

TSMC has received samples of Micron’s HBM3 Gen2 memory and is working with Micron on further evaluation and testing for next-generation HPC applications.

The Micron HBM3 Gen2 solution addresses increasing demands in the world of generative AI for multimodal, multitrillion-parameter AI models. With 24GB of capacity per cube and a pin speed of more than 9.2Gb/s, it reduces the training time for large language models by more than 30%, which lowers total cost of ownership (TCO).
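To relate the pin speed to per-cube bandwidth, the per-pin rate can be multiplied by the interface width. The sketch below assumes the 1024-bit data interface defined for HBM3 stacks; that width is not stated in this article, and `peak_bandwidth_gbytes_per_s` is a hypothetical helper name.

```python
def peak_bandwidth_gbytes_per_s(pin_speed_gbit_s: float,
                                bus_width_bits: int = 1024) -> float:
    """Theoretical peak bandwidth of one cube in GB/s.

    bus_width_bits defaults to 1024, an assumption taken from the
    HBM3 interface width rather than from the article.
    """
    return pin_speed_gbit_s * bus_width_bits / 8


bandwidth = peak_bandwidth_gbytes_per_s(9.2)
print(f"{bandwidth:.1f} GB/s")
```

Under that assumption, 9.2Gb/s per pin works out to roughly 1.18TB/s per cube; since the article quotes "more than 9.2Gb/s", the real figure would be somewhat higher.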

Micron’s offering unlocks a significant increase in queries per day, enabling trained models to be used more efficiently. Micron HBM3 Gen2 memory’s performance per watt drives data centre cost savings:

For an installation of 10 million GPUs, every five watts of power saved per HBM cube is estimated to save up to $550 million in operational expenses over five years.
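The article does not give the inputs behind this estimate. As a rough sketch of how such a figure could be built up, the function below multiplies the saved power by running time and an electricity price. The number of cubes per GPU, the price per kWh, and the overhead factor for cooling are all assumptions chosen for illustration, not values from Micron.

```python
HOURS_PER_YEAR = 365 * 24  # ignores leap days


def opex_savings_usd(gpus: int,
                     watts_saved_per_cube: float,
                     cubes_per_gpu: int,
                     usd_per_kwh: float,
                     years: int = 5,
                     overhead_factor: float = 1.0) -> float:
    """Electricity cost avoided over the period, in US dollars.

    cubes_per_gpu, usd_per_kwh and overhead_factor (a multiplier for
    cooling and power-delivery losses) are illustrative assumptions.
    """
    watts_saved = gpus * cubes_per_gpu * watts_saved_per_cube * overhead_factor
    kwh_saved = watts_saved * HOURS_PER_YEAR * years / 1000
    return kwh_saved * usd_per_kwh


# Illustrative inputs only: one cube per GPU at $0.10 per kWh.
savings = opex_savings_usd(10_000_000, 5.0, cubes_per_gpu=1, usd_per_kwh=0.10)
print(f"${savings / 1e6:.0f} million")
```

With one cube per GPU and $0.10 per kWh the sketch gives about $219 million, so the quoted "up to $550 million" presumably rests on different inputs, such as more cubes per GPU, a higher energy price, or cooling overhead.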